Multiplayer Browser Game

This project investigated real-time networking between a server and its clients, with a focus on handling latency. It was built with HTML, CSS, TypeScript, JavaScript, and Docker. The project was fairly intricate: it involved latency compensation, client-server communication, and running the game simulation on the server, so that clients never have direct access to the game state.

The front end was built with HTML, CSS, JavaScript, and the Three.js library. It is a thin client: the server controls everything, and the client only sends inputs to the server and renders the data it receives. On every tick, the server transmits a snapshot of the current state, containing all renderable objects, to the client. The front end takes these snapshots and displays the game state as a 2D scene using Three.js. Communication between the server and client uses WebSockets. Ideally, a UDP socket would be preferred, but browsers do not expose raw UDP sockets to web pages. WebSockets, which run over TCP, were the most practical option; WebRTC data channels and WebTransport can provide unreliable, UDP-like delivery but are more involved to set up.

The back end is built with Node.js, TypeScript, Three.js, and Docker. It runs a WebSocket server that clients connect to in order to transmit their inputs. While running, the server processes these inputs and periodically sends updates to all clients. The update frequency, known as the tick rate, is the number of updates the server sends to clients each second. The server currently runs at a 20 Hz tick rate, so it sends a snapshot of the game state to the clients every 50 ms. There are two main reasons for this low tick rate. First, the game doesn't require rapid movement or quick reflexes; it's a straightforward top-down 2D game. Second, the server runs on Node.js, and for a fast-paced, twitchy game, JavaScript on Node.js may not be able to finish updating the game state within each tick interval at a higher tick rate. There is also a risk of lag spikes caused by garbage collection. Neither is a significant concern for this project, since the game is relatively slow and simple. If the game required a faster tick rate, a lower-level compiled language such as C++ would be a better choice.
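As a rough illustration of what a 20 Hz tick loop can look like, here is a minimal sketch. It is not the project's actual code: `FixedTickClock`, `startServerLoop`, and the `update` and `broadcast` callbacks are hypothetical names. The sketch assumes a fixed-timestep accumulator, so that a late timer callback runs the missed ticks instead of silently slowing the simulation.

```typescript
// Hypothetical sketch of a fixed-rate server loop; all names are illustrative.
const TICK_RATE_HZ = 20;
const TICK_INTERVAL_MS = 1000 / TICK_RATE_HZ; // 50 ms per tick

class FixedTickClock {
  private accumulatorMs = 0;
  private readonly intervalMs: number;

  constructor(intervalMs: number) {
    this.intervalMs = intervalMs;
  }

  // Adds elapsed wall-clock time and returns how many whole ticks are now due.
  advance(elapsedMs: number): number {
    this.accumulatorMs += elapsedMs;
    const due = Math.floor(this.accumulatorMs / this.intervalMs);
    this.accumulatorMs -= due * this.intervalMs;
    return due;
  }
}

// Runs `update` once per due tick and `broadcast` once per timer callback
// in which at least one tick ran. Returns a function that stops the loop.
function startServerLoop(
  update: (dtSeconds: number) => void,
  broadcast: () => void,
): () => void {
  const clock = new FixedTickClock(TICK_INTERVAL_MS);
  let lastMs = Date.now();
  const timer = setInterval(() => {
    const nowMs = Date.now();
    const due = clock.advance(nowMs - lastMs);
    lastMs = nowMs;
    for (let i = 0; i < due; i++) {
      update(TICK_INTERVAL_MS / 1000);
    }
    if (due > 0) {
      broadcast();
    }
  }, TICK_INTERVAL_MS / 2); // poll faster than the tick rate to limit jitter
  return () => clearInterval(timer);
}
```

If `update` regularly takes longer than 50 ms, the accumulator keeps handing back more than one due tick per callback, which is one way the "cannot keep up with the tick rate" problem described above would show itself.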

To begin, the client establishes a connection with the server and transmits initial data such as the player's name. The server then creates a new player in the world and assigns it a universally unique identifier (UUID). Once the player object exists, the server sends a serialized JSON object containing the player's details and UUID to the client. The client receives this information, constructs a player object for rendering, and includes the UUID in its input data from then on. After the client-side player is rendered, the client closes the initialization socket connection and opens a new one. This new WebSocket connection carries the ongoing communication between the client and the server. The client transmits inputs to the server, which processes them: in its update loop, the server searches the game scene for the player object whose UUID matches the one attached to the input packet and updates that object's data. On the next server tick, it collects all renderable objects and serializes them into a compact JSON object array. This array is then transmitted to all clients connected to the server. Upon receiving the server data, the client updates the relevant objects, taking into account the difference between the previous and current server ticks. Finally, the client renders the scene.
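The server side of this flow can be sketched roughly as follows. This is an illustration under assumptions, not the project's code: the `World` class, the `InputPacket` and `PlayerState` shapes, the field names, and `PLAYER_SPEED` are all hypothetical, the WebSocket transport is left out, and the player ID is passed in rather than generated, so the example stays self-contained.

```typescript
// Hypothetical message and state shapes; the real project's fields may differ.
interface InputPacket { playerId: string; dx: number; dy: number; }
interface PlayerState { id: string; name: string; x: number; y: number; }

const PLAYER_SPEED = 100; // world units per second (arbitrary assumption)

// The server is authoritative, so client-supplied axes are clamped to [-1, 1].
function clampAxis(value: number): number {
  return Math.max(-1, Math.min(1, value));
}

class World {
  private readonly players = new Map<string, PlayerState>();
  private pendingInputs: InputPacket[] = [];
  private tick = 0;

  // Creates a player and returns the JSON the server would send back
  // over the initialization connection.
  addPlayer(id: string, name: string): string {
    const player: PlayerState = { id, name, x: 0, y: 0 };
    this.players.set(id, player);
    return JSON.stringify(player);
  }

  // Called for each message received on the gameplay connection.
  queueInput(rawMessage: string): void {
    this.pendingInputs.push(JSON.parse(rawMessage) as InputPacket);
  }

  // One server tick: apply queued inputs to the matching players.
  step(dtSeconds: number): void {
    for (const input of this.pendingInputs) {
      const player = this.players.get(input.playerId);
      if (!player) continue; // unknown ID: ignore the packet
      player.x += clampAxis(input.dx) * PLAYER_SPEED * dtSeconds;
      player.y += clampAxis(input.dy) * PLAYER_SPEED * dtSeconds;
    }
    this.pendingInputs = [];
    this.tick += 1;
  }

  // Serializes only what clients need in order to render.
  snapshot(): string {
    const objects = Array.from(this.players.values(), (p) => ({
      id: p.id,
      x: p.x,
      y: p.y,
    }));
    return JSON.stringify({ tick: this.tick, objects });
  }
}
```

Including the tick number in the snapshot is an assumption made here so the client can tell how far apart two snapshots are; the original only states that the client considers the difference between ticks.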

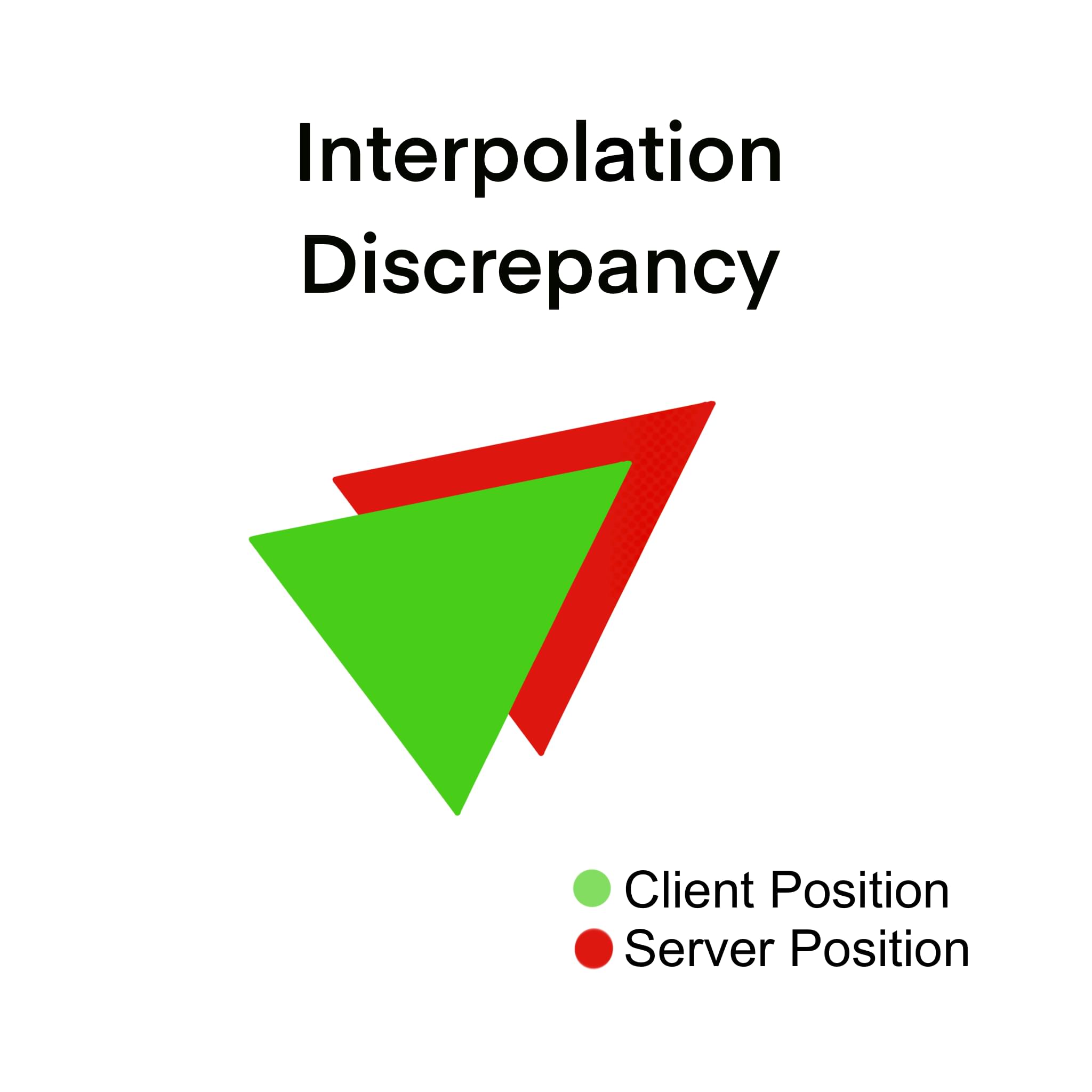

One of the most challenging aspects of the project was developing an effective algorithm for interpolating movement between the previous and current server ticks. Without interpolation, movement appeared choppy, because what the client displayed only changed at the server tick rate, effectively 20 frames per second (fps). Interpolation lets the client render at 60 fps while staying synchronized with the 20 Hz server state. However, it also introduces a slight delay between what the client renders and the server's current state, causing a discrepancy between the scene the client displays and the state held by the server.
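A common way to do this kind of interpolation, sketched here under assumptions rather than taken from the project, is to keep a short buffer of timestamped snapshots and render slightly in the past, blending linearly between the two snapshots that bracket the render time. The `Snapshot` shape and the function names are hypothetical, and the buffer is assumed to be sorted by time, oldest first.

```typescript
interface Vec2 { x: number; y: number; }

// Hypothetical snapshot shape: arrival time plus a position per object ID.
interface Snapshot { timeMs: number; positions: Record<string, Vec2>; }

function lerp(a: number, b: number, t: number): number {
  return a + (b - a) * t;
}

// Returns interpolated positions for `renderTimeMs`.
// Assumes `buffer` is sorted by `timeMs` in ascending order.
function interpolateSnapshots(
  buffer: Snapshot[],
  renderTimeMs: number,
): Record<string, Vec2> {
  if (buffer.length === 0) return {};
  const oldest = buffer[0];
  const newest = buffer[buffer.length - 1];
  // Outside the buffered range: clamp instead of extrapolating.
  if (renderTimeMs <= oldest.timeMs) return { ...oldest.positions };
  if (renderTimeMs >= newest.timeMs) return { ...newest.positions };

  // Find the first snapshot at or after the render time.
  let i = 1;
  while (buffer[i].timeMs < renderTimeMs) i++;
  const from = buffer[i - 1];
  const to = buffer[i];
  const t = (renderTimeMs - from.timeMs) / (to.timeMs - from.timeMs);

  const result: Record<string, Vec2> = {};
  for (const id of Object.keys(to.positions)) {
    const end = to.positions[id];
    // Objects that just appeared have no earlier position; snap to the new one.
    const start = from.positions[id] ?? end;
    result[id] = { x: lerp(start.x, end.x, t), y: lerp(start.y, end.y, t) };
  }
  return result;
}
```

On each 60 fps frame the client would call this with something like the current time minus a fixed delay of one or two tick intervals (50 to 100 ms at 20 Hz). That fixed delay is the source of the lag between the rendered scene and the server state described above.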

This discrepancy becomes visible when the player object's collision on the server differs slightly from what the client is displaying. While this may not be a significant concern in games with a high tick rate, it was noticeable in this small project.

Regrettably, I was unable to test the latency compensation between the server and the client because I never published this project on the open web. The main reason was the cost of running an AWS EC2 instance: I was unwilling to pay more than five to eight dollars per month for a project that would likely receive minimal viewership. When running locally, there is hardly any latency, so local testing is not a reliable indicator of real-world conditions on the open web, where latency between a client and a server can reach 80 ms or more.